Scaleway Kapsule Overview

May 4, 2020

kubernetes

scaleway

cloud

Kapsule is the managed Kubernetes offering of Scaleway (formerly Online Labs), a French cloud provider akin to DigitalOcean.

This article is an architecture review of the technical and design choices that were made. It moves fast and tries to shed some light on the most interesting points, skipping the basics.

TL;DR: if you are only interested in the technical part, jump to the end.

Provisioning

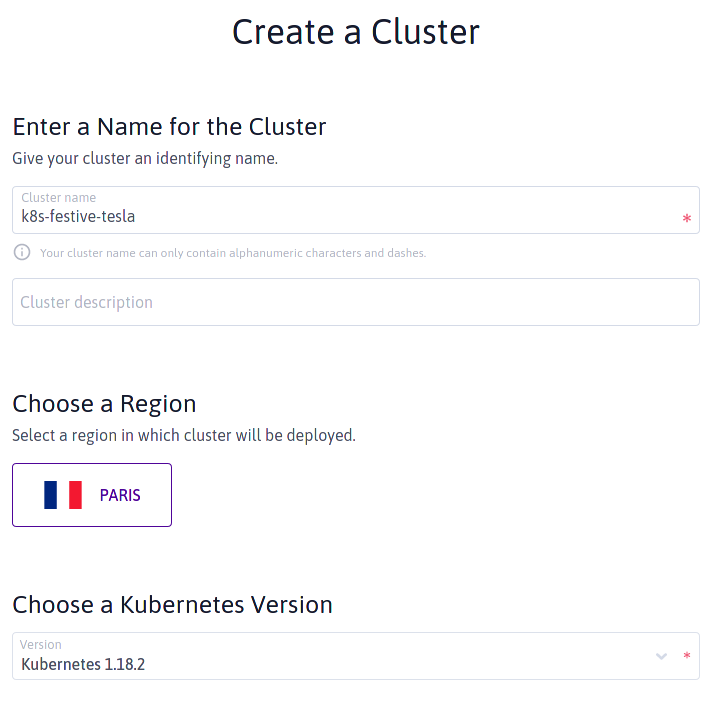

Clusters are provisioned through the Scaleway web console or API.

The control-plane services (kube-apiserver, kube-scheduler, etcd) are not part of the cluster and so are not billed (directly) to the user. This is the same approach as the one used by GCP (before they announced they would bill users for control-plane services starting June 2020).

Interface

Screenshot of the deployment interface. Only the PARIS region is available for Kubernetes.

The minimal cost of a cluster is €8/month (€0.016/hour) excluding taxes, using a single node with a DEV1-M instance composed of 3 cores (amd64), 4GB RAM and 40GB of local NVMe storage.

This is basically the same price as deploying the instance itself. You really get the k8s control plane for free. Compute-wise at least.
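
The hourly and monthly prices quoted above are not a straight multiplication of one another, so the arithmetic is worth a quick look. A minimal sketch, assuming an average month of 730 hours (an assumption made here, not a Scaleway figure):

```python
# Prices quoted above for a single DEV1-M node (excluding taxes).
HOURLY_EUR = 0.016
MONTHLY_EUR = 8.0

# Assumption for illustration: an average month of 730 hours (365 * 24 / 12).
HOURS_PER_MONTH = 730

# Hours of hourly billing needed to reach the monthly price.
implied_hours = round(MONTHLY_EUR / HOURLY_EUR)

# What a full month would cost if every hour were billed at the hourly rate.
uncapped_month = round(HOURLY_EUR * HOURS_PER_MONTH, 2)

print(f"monthly price reached after {implied_hours} hours")
print(f"{HOURS_PER_MONTH} hours at the hourly rate: EUR {uncapped_month}")
```

The monthly figure corresponds to 500 billed hours, which suggests it behaves as a ceiling on hourly billing rather than as 730 hours of usage. Check the current pricing page before relying on that reading.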

Velocity

A small cluster (1 to 3 nodes) takes under 5 minutes to deploy, from button press to kubeconfig.

Kubernetes versions

At the time of this writing, the latest upstream version is v1.18.2 (with v1.19.0 being in alpha2). The following versions are available from Scaleway:

- v1.15.11

- v1.16.9

- v1.17.5

- v1.18.2

No unmaintained versions like 1.12 or 1.13 here, only the latest patch releases, which can sometimes be an issue when a feature you use is broken upstream. People looking to port old workloads are expected to upgrade.

Autoscaling and resiliency

Scaleway allows autoscaling of the cluster within a node limit, and auto-healing of nodes.

Auto-healing is a basic check performed on each node: the node is rebooted if its kubelet is unhealthy for more than 15 minutes, and replaced by a new node after 30 minutes.
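
The auto-healing timeline can be sketched as a small decision function. This only illustrates the policy as described here, not Scaleway's implementation: the function name is hypothetical, and treating exactly 30 minutes as "replace" is an assumption.

```python
# Thresholds taken from the description above, in minutes.
REBOOT_AFTER_MIN = 15
REPLACE_AFTER_MIN = 30


def healing_action(unhealthy_minutes: float) -> str:
    """Return the action expected for a node whose kubelet has been
    unhealthy for `unhealthy_minutes` (hypothetical helper)."""
    if unhealthy_minutes >= REPLACE_AFTER_MIN:
        return "replace"  # a new node is spun up
    if unhealthy_minutes > REBOOT_AFTER_MIN:
        return "reboot"
    return "wait"


for minutes in (5, 20, 45):
    print(minutes, healing_action(minutes))
```

In practice this means a workload pinned to a broken node can stay unavailable for up to half an hour before the node is replaced, unless the scheduler can move it elsewhere.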

CNI and optional features

The available CNIs are Cilium, Calico, Weave and Flannel. You can choose to deploy an ingress controller on top: ingress-nginx or Traefik.

Architecture and overall design

This is an out-of-cluster master design, meaning:

- you don’t have to manage etcd (that one could be a relief)

- you don’t have to manage certificates

- you don’t have access to master node parameters (feature gates, for example)

SSH is disabled on the worker node instances, but they are provisioned with an attached IPv4 address and an IPv6 /64.

CSI, PV and PVC

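An out-of-tree block storage CSI driver is provided, along with two storage classes. The default reclaim policy is Delete, so the underlying volume is removed as soon as its PersistentVolumeClaim is deleted. Be careful when cleaning up workloads together with their claims!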

% kc get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEEXPANSION

scw-bssd (default) csi.scaleway.com Delete false

scw-bssd-retain csi.scaleway.com Retain false

Sadly, there is no volume expansion.
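
To keep data after a claim is deleted, a claim can ask for the retaining class explicitly. A minimal sketch that prints a PersistentVolumeClaim manifest as JSON (kubectl accepts JSON as well as YAML). The claim name and size are hypothetical; only the storage class name comes from the output above.

```python
import json

# Hypothetical claim name and size; only the storage class name comes from
# the `kc get sc` output above.
pvc = {
    "apiVersion": "v1",
    "kind": "PersistentVolumeClaim",
    "metadata": {"name": "data-retained"},
    "spec": {
        "accessModes": ["ReadWriteOnce"],
        "storageClassName": "scw-bssd-retain",
        "resources": {"requests": {"storage": "10Gi"}},
    },
}

manifest = json.dumps(pvc, indent=2)
print(manifest)
```

Saved as a script, the output can be piped into `kc apply -f -`. Since volumes cannot be expanded, the requested size deserves some thought up front.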

Technical details

Everything here is based on a v1.18.2 cluster with 2 worker nodes, ingress-nginx and Cilium.

Default daemonsets

% kc get ds

NAMESPACE NAME DESIRED CURRENT NODE SELECTOR

kube-system cilium 2 2 <none>

kube-system csi-node 2 2 <none>

kube-system kube-proxy 2 2 <none>

kube-system nginx-ingress 2 2 <none>

kube-system node-problem-detector 2 2 <none>

Default pods

% kc get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS

kube-system cilium-jnljw 1/1 Running 0

kube-system cilium-mmjvk 1/1 Running 0

kube-system cilium-operator-84b66df75c-prqvp 1/1 Running 0

kube-system coredns-7489757d7-tlpkp 1/1 Running 0

kube-system csi-node-4g2gd 2/2 Running 0

kube-system csi-node-qr9zc 2/2 Running 0

kube-system kube-proxy-4zgvl 1/1 Running 0

kube-system kube-proxy-59clk 1/1 Running 0

kube-system metrics-server-9d5bcc584-hph7m 1/1 Running 0

kube-system nginx-ingress-nnxvn 1/1 Running 0

kube-system nginx-ingress-twtkt 1/1 Running 0

kube-system node-problem-detector-44dgp 1/1 Running 0

kube-system node-problem-detector-njgbb 1/1 Running 0

Service accounts and PSP

% kc get sa --all-namespaces -o wide

NAMESPACE NAME SECRETS AGE

default default 1 10m

kube-node-lease default 1 10m

kube-public default 1 10m

kube-system cilium 1 10m

kube-system cilium-operator 1 10m

kube-system coredns 1 10m

kube-system csi-node-sa 1 10m

kube-system default 1 10m

kube-system kube-proxy 1 10m

kube-system metrics-server 1 10m

kube-system nginx-ingress 1 10m

kube-system node-problem-detector 1 10m

% kc get psp --all-namespaces

No resources found.

Metrics and nodes

% kc top nodes

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

scw-k8s-clever-wright-234 109m 3% 721Mi 24%

scw-k8s-clever-wright-fa7 117m 4% 674Mi 23%

The kernel + kubelet + daemonset tax is around 700 MiB per node, or more than 20% of the allocatable memory. The same holds on a four-node cluster:

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

scw-k8s-clever-wright-default-234d104f0e2045da 166m 5% 992Mi 34%

scw-k8s-clever-wright-default-7317726a5df64669 102m 3% 669Mi 23%

scw-k8s-clever-wright-default-c821de8a86da47b3 102m 3% 671Mi 23%

scw-k8s-clever-wright-default-ec5259561f334432 89m 3% 672Mi 23%
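
The per-node overhead can be averaged directly from that output. A small sketch that parses the four lines above; the parsing assumes memory is always reported in `Mi`, as it is here.

```python
# Output of `kc top nodes` on the four-node cluster, copied from above.
TOP_NODES = """\
scw-k8s-clever-wright-default-234d104f0e2045da 166m 5% 992Mi 34%
scw-k8s-clever-wright-default-7317726a5df64669 102m 3% 669Mi 23%
scw-k8s-clever-wright-default-c821de8a86da47b3 102m 3% 671Mi 23%
scw-k8s-clever-wright-default-ec5259561f334432 89m 3% 672Mi 23%
"""


def parse_top_nodes(text: str) -> list:
    """Return (node name, memory in Mi, memory %) for each line."""
    rows = []
    for line in text.strip().splitlines():
        name, _cpu, _cpu_pct, mem, mem_pct = line.split()
        # Assumes the "Mi" unit and a trailing "%" sign.
        rows.append((name, int(mem.replace("Mi", "")), int(mem_pct.rstrip("%"))))
    return rows


rows = parse_top_nodes(TOP_NODES)
mean_mem = sum(mem for _, mem, _ in rows) / len(rows)
mean_pct = sum(pct for _, _, pct in rows) / len(rows)
print(f"mean memory in use: {mean_mem:.0f} Mi ({mean_pct:.2f}%)")
```

Three of the four nodes sit around 670 Mi, in line with the two-node cluster. The first node is higher, presumably because it also hosts non-daemonset pods, though the output does not say which.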

% kc describe nodes

[...]

System Info:

Machine ID: 17733faa521042f8921d458f918a5539

System UUID: 17733faa-5210-42f8-921d-458f918a5539

Boot ID: 02b6453d-6a74-43f2-814d-008887799706

Kernel Version: 5.3.0-42-generic

OS Image: Ubuntu 18.04.3 LTS 0d484f1faf

Operating System: linux

Architecture: amd64

Container Runtime Version: docker://19.3.5

Kubelet Version: v1.18.2

Kube-Proxy Version: v1.18.2

PodCIDR: 100.64.1.0/24

Ingress configuration

The default ingress instances bind the ports http/80 and https/443 on each node.

% kc describe -n kube-system pod nginx-ingress-twtkt

[...]

Ports: 80/TCP, 443/TCP

Host Ports: 80/TCP, 443/TCP
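
Since the ports are bound on the host, they should answer on each node's address. A minimal sketch of a TCP reachability check; the helper names are hypothetical and the node address has to be supplied by you.

```python
import socket


def port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def check_ingress_ports(node_ip: str) -> dict:
    """Check the two host ports bound by the ingress pod on one node."""
    return {port: port_open(node_ip, port) for port in (80, 443)}
```

Calling `check_ingress_ports("<node public IP>")` returns a mapping such as `{80: True, 443: True}` when both ports accept connections. This only proves that something is listening, not that any Ingress rule is configured.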

We are going to use that to set up ingress-nginx in a future post.